SLAC’s New Computer Science Division Teams with Stanford to Tackle Data Onslaught

Finding ways to handle torrents of data from LSST and LCLS-II will also advance “exascale” computing.

Alex Aiken, director of the new Computer Science Division at the Department of Energy's SLAC National Accelerator Laboratory, has been thinking a great deal about the coming challenges of exascale computing, defined as a billion billion calculations per second. That’s a thousand times faster than any computer today. Reaching this milestone is such a big challenge that it’s expected to take until the mid-2020s and require entirely new approaches to programming, data management and analysis, and numerous other aspects of computing.

SLAC and Stanford, Aiken believes, are in a great position to join forces and work toward these goals while advancing SLAC science.

“The kinds of problems SLAC scientists have are at such an extreme scale that they really push the limits of all those systems,” he says. “We believe there is an opportunity here to build a world-class Department of Energy computer science group at SLAC, with an emphasis on large-scale data analysis.”

Even before taking charge of the division on April 1, Aiken had a foot in both worlds, working on DOE-funded projects at Stanford. He’ll continue in his roles as professor and chair of the Stanford Computer Science Department while building the new SLAC division.

Solving Problems at the Exascale

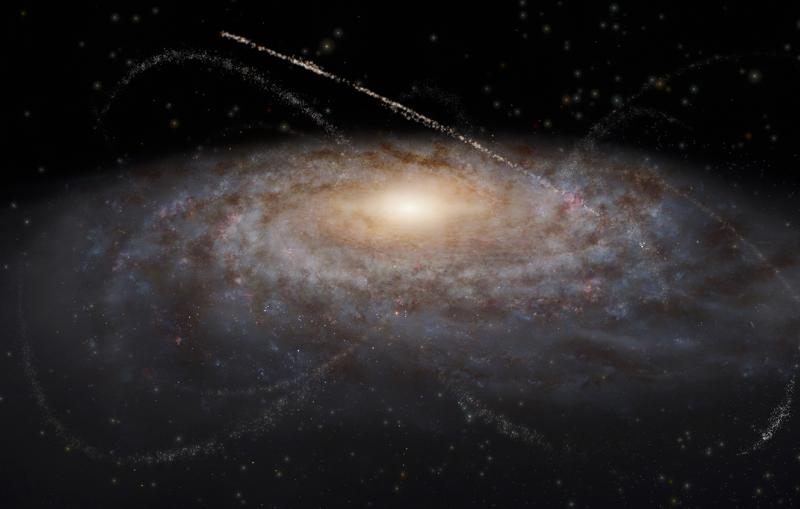

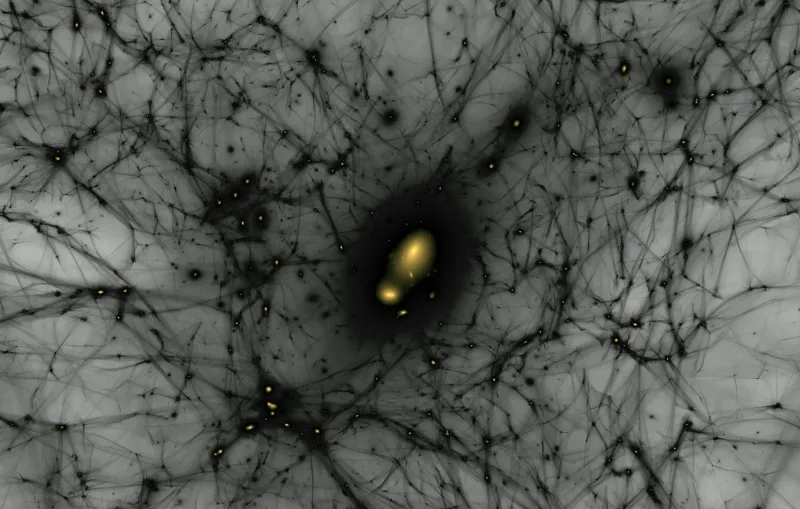

SLAC has a lot of tough computational problems to solve, from simulating the behavior of complex materials, chemical reactions and the cosmos to analyzing vast torrents of data from the upcoming LCLS-II and LSST projects. SLAC’s Linac Coherent Light Source (LCLS) is a DOE Office of Science User Facility.

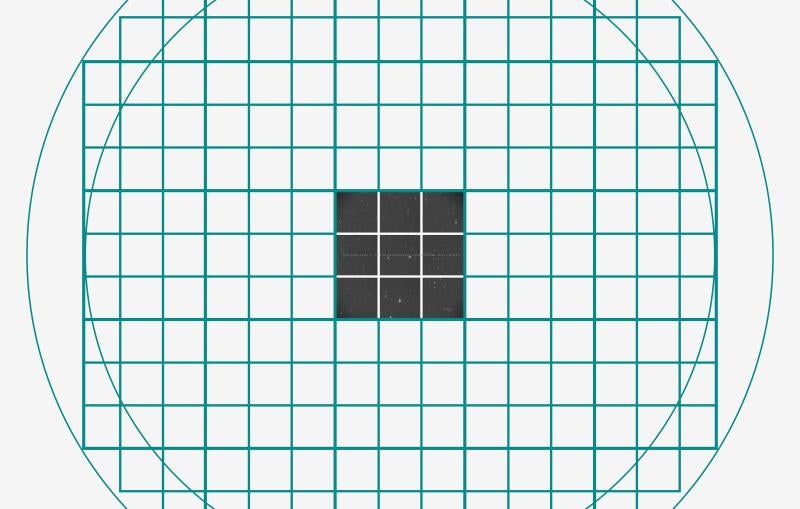

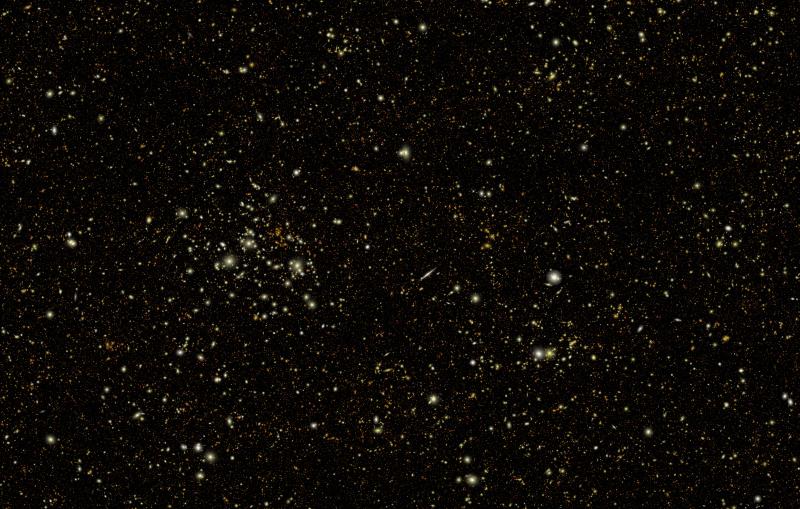

LSST, the Large Synoptic Survey Telescope, will survey the entire Southern Hemisphere sky every few days from a mountaintop in Chile starting in 2022. It will produce 6 million gigabytes of data per year – the equivalent of shooting roughly 800,000 images with an 8-megapixel digital camera every night. And the LCLS-II X-ray laser, which begins operations in 2020, will produce a thousand times more data than today’s LCLS.
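
As a rough sanity check on that comparison, the short Python sketch below works out the average image size implied by the two figures quoted above. It assumes decimal gigabytes (1 GB = 10^9 bytes) and 365 observing nights per year; neither assumption comes from the LSST project, and the function name is purely illustrative.

```python
# Back-of-the-envelope check of the LSST data-volume comparison.
# From the article: 6 million gigabytes per year, roughly 800,000 images per night.
# Assumptions (not from the article): decimal gigabytes and 365 nights per year.

BYTES_PER_GB = 10**9      # assumption: decimal gigabyte
NIGHTS_PER_YEAR = 365     # assumption: data taken every night of the year


def bytes_per_image(gb_per_year: float, images_per_night: float) -> float:
    """Average bytes per image implied by a yearly volume and a nightly image count."""
    bytes_per_night = gb_per_year * BYTES_PER_GB / NIGHTS_PER_YEAR
    return bytes_per_night / images_per_night


implied = bytes_per_image(6_000_000, 800_000)
print(f"Implied size per image: {implied / 1e6:.1f} MB")
print(f"Implied bytes per pixel at 8 megapixels: {implied / 8e6:.2f}")
```

Under these assumptions the figures imply about 20.5 megabytes per image, or roughly 2.6 bytes per pixel, which is in the range of an uncompressed 8-megapixel color photo.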

The DOE has led U.S. efforts to develop high-performance computing for decades, and computer science is increasingly central to the DOE mission, Aiken says. One of the big challenges across a number of fields is to find ways to process data on the fly, so researchers can obtain rapid feedback to make the best use of limited experimental time and determine which data are interesting enough to analyze in depth.

The DOE recently launched the Exascale Computing Initiative (ECI), led by the Office of Science and National Nuclear Security Administration, as part of a broader National Strategic Computing Initiative. It aims to develop capable exascale computing systems for science, national security and energy technology development by the mid-2020s.

Staffing up and Enhancing Collaborations

On the Stanford side, the university has been performing world-class computer science – a field Aiken loosely describes as, “How do you make computers useful for a variety of things that people want to do with them?” – for more than half a century. But since faculty members mainly work through graduate students and postdoctoral researchers, projects tend to be limited to the 3- to 5-year lifespan of those positions.

The new SLAC division will provide a more stable basis for the type of long-term collaboration needed to solve the most challenging scientific problems. Stanford computer scientists have already been involved with the LSST project, and Aiken himself is working on new exascale computing initiatives at SLAC: “That’s where I’m spending my own research time.”

He is in the process of hiring four SLAC staff scientists, with plans to eventually expand to a group of 10 to 15 researchers and two initial joint faculty positions. The division will eventually be housed in the Photon Science Laboratory Building that’s now under construction, maximizing its members’ interaction with researchers who use intensive computing for ultrafast science and biology. Stanford graduate students and postdocs will also be an important part of the mix.

While initial funding is coming from SLAC and Stanford, Aiken says he will be applying for funding from the DOE’s Advanced Scientific Computing Research program, the Exascale Computing Initiative and other sources to make the division self-sustaining.

Two Sets of Roots

Aiken came to Stanford in 2003 from the University of California, Berkeley, where he was a professor of engineering and computer science. Before that he spent five years at IBM Almaden Research Center.

He received a bachelor’s degree in computer science and music from Bowling Green State University in 1983 and a PhD from Cornell in 1988. Aiken met his wife, Jennifer Widom, in a music practice room when they were graduate students (he played trombone, she played trumpet). Widom is now a professor of computer science and electrical engineering at Stanford and senior associate dean for faculty and academic affairs for the School of Engineering. Avid and adventurous travelers, they have taken their son and daughter, both now grown, on trekking, backpacking, scuba diving and sailing trips all over the world.

The roots of the new SLAC Computer Science Division go back to fall 2014, when Aiken began meeting with two key faculty members – Stanford Professor Pat Hanrahan, a computer graphics researcher who was a founding member of Pixar Animation Studios and has received three Academy Awards for rendering and computer graphics, and SLAC/Stanford Professor Tom Abel, director of the Kavli Institute for Particle Astrophysics and Cosmology, who specializes in computer simulations and visualizations of cosmic phenomena. The talks quickly drew in other faculty and staff, and led to a formal proposal late last year that outlined potential synergies between SLAC, Stanford and Silicon Valley firms that develop computer hardware and software.

“Modern algorithms that exploit new computer architectures, combined with our unique data sets at SLAC, will allow us to do science that is greater than the sum of its parts,” Abel said. “I am so looking forward to having more colleagues at SLAC to discuss things like extreme data analytics and how to program exascale computers.”

Aiken says he has identified eight Stanford computer science faculty members and a number of SLAC researchers with LCLS, LSST, the Particle Astrophysics and Cosmology Division, the Elementary Particle Physics Division and the Accelerator Directorate who want to get involved. “We keep hearing from more SLAC people who are interested,” he says. “We’re looking forward to working with everyone!”

For questions or comments, contact the SLAC Office of Communications at communications@slac.stanford.edu.

SLAC is a multi-program laboratory exploring frontier questions in photon science, astrophysics, particle physics and accelerator research. Located in Menlo Park, Calif., SLAC is operated by Stanford University for the U.S. Department of Energy's Office of Science.

SLAC National Accelerator Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.